This lesson expands your text and file tooling. It shows how to search the filesystem with find, chain work with xargs, edit text streams and files with sed, and report on structured data with awk. The focus is on safe patterns that work on real projects.

- find: locate files or directories by name, size, time, or type

- xargs: convert a stream of names into argument lists for a command

- sed: non interactive editing and search or replace

- awk: field based processing and quick reports

Prerequisites

- Day 12 completed

- A project folder with sample files, or the provided playground

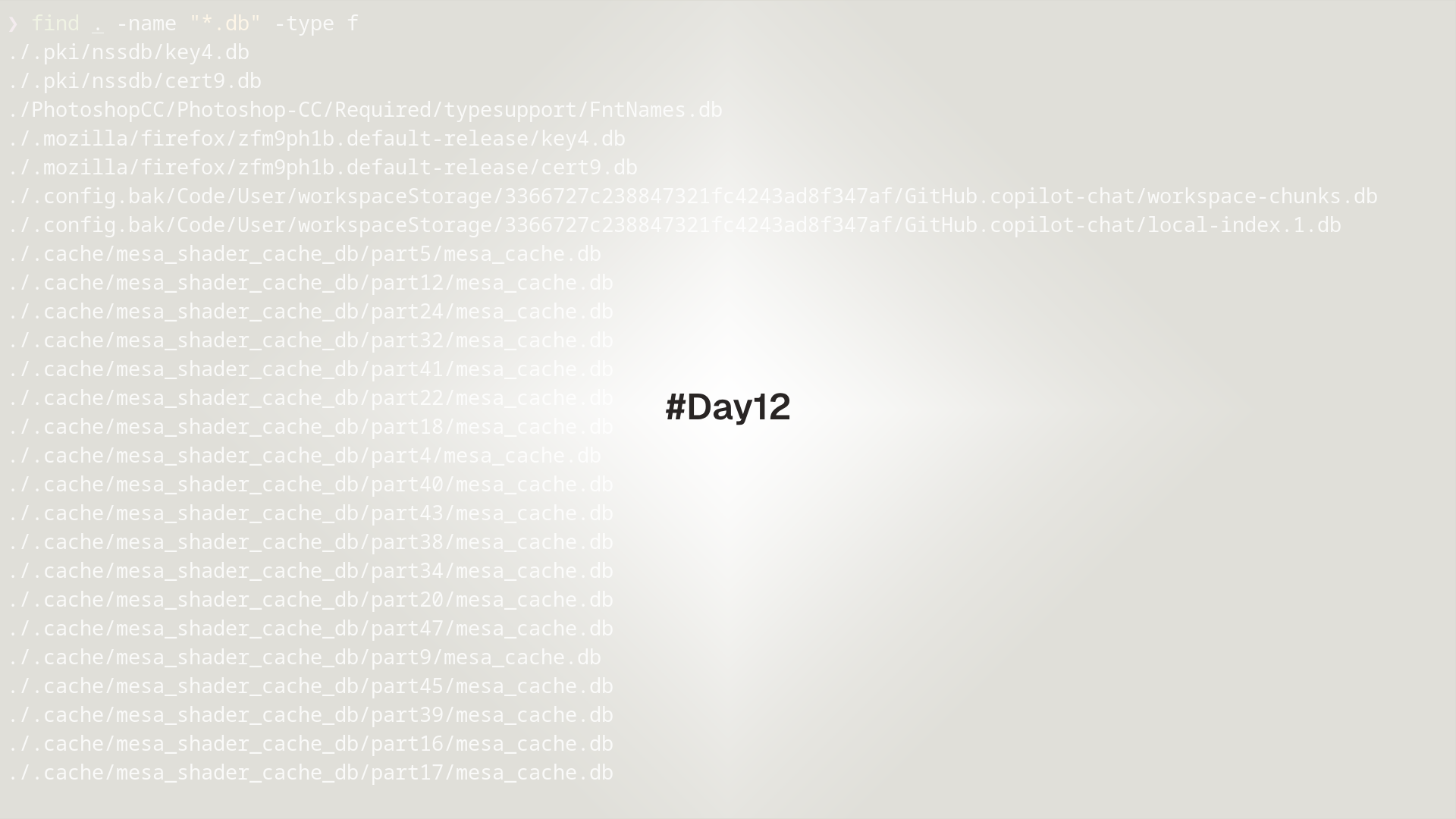

find basics

# by name and type

find . -type f -name "*.log"

# case insensitive name search

find src -type f -iname "*.md"

# by size and modification time

find /var/log -type f -size +50M -mtime -7 -printf '%p %s bytes\n'

# limit depth

find . -maxdepth 2 -type d -name build

# exclude directories

find . -type f -name "*.py" -not -path "*/venv/*"

Useful tests and actions:

- -type f|d|l: file, directory, symlink

- -name, and -iname for case insensitive matching

- -mtime -7: modified in the last 7 days; -mmin -60: modified in the last hour

- -size +100M: larger than 100 MiB

- -print0: print names with NUL separators for safe piping

- -delete: remove matches; use only after testing with -print

Use -xdev to avoid descending into mounted filesystems like backups or network shares when running cleanup jobs.
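The preview-then-delete habit can be sketched as below. This is a minimal example on a throwaway directory; the file names and the demo variable are made up for illustration, and -delete assumes GNU or BSD find.

```shell
# Build a throwaway tree (all paths here are illustrative).
demo=$(mktemp -d)
mkdir -p "$demo/build" "$demo/src"
touch "$demo/build/old.tmp" "$demo/src/keep.txt" "$demo/src/scratch.tmp"

# 1. Preview: list what the expression matches.
preview=$(find "$demo" -xdev -type f -name '*.tmp' -print | sort)
printf '%s\n' "$preview"

# 2. Same expression, with -delete in place of -print.
find "$demo" -xdev -type f -name '*.tmp' -delete

# Only keep.txt should remain.
remaining=$(find "$demo" -type f | sort)
printf '%s\n' "$remaining"
```

Keeping the test expression identical between the two runs is the point: only the action at the end changes.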

xargs for safe bulk actions

xargs builds command lines from standard input. Combine with -print0 to handle spaces and special characters.

# remove editor swap files safely

find . -type f -name "*.swp" -print0 | xargs -0 -r rm -v

# compress large logs in place

find /var/log -type f -name "*.log" -size +50M -print0 | xargs -0 -r gzip -v

# run a command once per file using {}

find images -type f -name "*.png" -print0 | xargs -0 -I{} -r echo "Converting {}"

Flags used:

- -0: read NUL separated input

- -r: do nothing on empty input

- -I{}: replace occurrences of {} in the command template

Replace the final command with printf '%s\n' to print file names before running destructive actions.
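As a rough sketch of that dry run, the example below first prints the matches with printf and then runs the same pipeline with rm. The temporary directory and file names are assumptions for the demo; -0 and -r assume GNU xargs or a recent BSD xargs.

```shell
# Throwaway directory with one name that contains a space.
demo=$(mktemp -d)
touch "$demo/a file.swp" "$demo/b.swp" "$demo/notes.txt"

# Dry run: printf stands in for rm and prints one name per line.
preview=$(find "$demo" -type f -name '*.swp' -print0 | xargs -0 -r printf '%s\n' | sort)
printf '%s\n' "$preview"

# Real run: the same pipeline with rm as the final command.
find "$demo" -type f -name '*.swp' -print0 | xargs -0 -r rm --

# Only notes.txt should be left.
left=$(ls "$demo")
printf '%s\n' "$left"
```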

sed for search and replace

sed edits streams and files. The most common task is search and replace.

# replace a word in a file and print to stdout

sed 's/error/warning/g' app.log | head

# in place with backup

sed -i.bak 's/http:\/\//https:\/\//g' site/config.ini

# replace only on lines that match a filter

sed '/^#.*TODO/ s/TODO/DONE/' notes.txt

# remove blank lines

sed '/^$/d' README.md

Use a different delimiter when slashes are noisy.

sed -i.bak 's|/var/www|/srv/www|g' nginx.conf

sed is line oriented and byte based. For binary files or complex encodings, choose a specialized tool. Always keep backups when running in place edits on important data.
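A small sketch of an in place edit with a backup, using | as the delimiter. The file site.conf and its contents are invented for the demo; the -i.bak form is used because it is accepted by both GNU and BSD sed.

```shell
# Sample config in a throwaway directory (contents are illustrative).
demo=$(mktemp -d)
printf 'root /var/www/html;\nalias /var/www/static;\n' > "$demo/site.conf"

# '|' as the delimiter avoids escaping every slash in the paths.
sed -i.bak 's|/var/www|/srv/www|g' "$demo/site.conf"

# The original is preserved next to the edited file.
changed=$(grep -c '/srv/www' "$demo/site.conf")
backup=$(grep -c '/var/www' "$demo/site.conf.bak")
echo "edited lines: $changed, lines kept in backup: $backup"

# diff exits non-zero when files differ, so guard it with || true.
diff "$demo/site.conf.bak" "$demo/site.conf" || true
```

Reviewing the diff against the .bak file is a cheap way to confirm the substitution touched only the intended lines.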

awk for fields and quick reports

awk reads lines, splits them into fields, and runs small programs written as patterns and actions.

# print the first and third fields of a CSV

echo 'user,action,duration' | awk -F, '{print $1, $3}'

# sum a numeric field grouped by a key

awk -F, 'NR>1 {sum[$2]+=$3} END {for (k in sum) printf "%s,%d\n", k, sum[k]}' data.csv | sort

# filter by status and compute average duration

awk '$9 ~ /^5../ {n++; s+=$NF} END {if (n) printf "avg=%0.2f\n", s/n}' access.tsv

Notes:

- -F sets the field delimiter

- $1, $2, $3 refer to fields, $0 is the whole line

- NR is the current line number, NF is the number of fields

- BEGIN and END blocks run before and after records
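The notes above can be seen together in one short program. This is a sketch on hypothetical inline data (a header row plus three records), not on any file from the lesson.

```shell
# Hypothetical sample data: a header row plus three records.
csv=$(printf 'user,action,duration\nalice,login,120\nbob,upload,80\nalice,upload,40\n')

report=$(printf '%s\n' "$csv" | awk -F, '
  BEGIN  { print "line fields user" }      # runs once, before any input
  NR > 1 { print NR, NF, $1; total += $3 } # skip the header row
  END    { print "total=" total }          # runs once, after the last line
')
printf '%s\n' "$report"
```

Each data row prints its line number, its field count (3), and the first field; the END block then prints total=240.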

Pretty table from delimited input:

awk -F, 'BEGIN{printf "%-10s %-10s %-10s\n", "user","action","ms"} NR>1{printf "%-10s %-10s %-10s\n", $1,$2,$3}' data.csv

When printing floats in awk, set a format string with printf to control decimal places. Example: printf "%0.2f".
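To make the difference concrete, here is a minimal comparison of print and printf on the same value. It assumes the default awk output format and a locale that uses a dot as the decimal separator.

```shell
# print uses the default output format; printf pins the number of decimals.
plain=$(awk 'BEGIN { print 10 / 3 }')
fixed=$(awk 'BEGIN { printf "%.2f\n", 10 / 3 }')
echo "print:  $plain"
echo "printf: $fixed"
```

With the default format, print shows 3.33333, while the printf version shows 3.33.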

Project wide search and replace

# change a config key across many files, preview first

rg -n "^API_URL=" 2>/dev/null || grep -R -n "^API_URL=" .

# safe in place edit with backups

find . -type f -name "*.env" -print0 \
| xargs -0 -r sed -i.bak 's|^API_URL=.*|API_URL=https://api.example.com|'

Mass rename with rename when available, or with a small bash loop.

# rename JPG to jpg using perl rename

rename 's/\.JPG$/.jpg/' *.JPG 2>/dev/null || true

# portable loop fallback

for f in *.JPG; do mv -v -- "$f" "${f%.JPG}.jpg"; done

Use version control or make .bak files before bulk edits. Test on a small subset first.
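One way to test on a single file without changing it is to run the substitution with sed -n and the p flag, which prints only the lines that would change. The file app.env and its contents below are made up for the sketch.

```shell
# One sample file standing in for the small subset.
demo=$(mktemp -d)
printf 'API_URL=http://old.example.com\nDEBUG=1\n' > "$demo/app.env"

# -n suppresses normal output; the p flag prints only lines the substitution changed.
preview=$(sed -n 's|^API_URL=.*|API_URL=https://api.example.com|p' "$demo/app.env")
printf '%s\n' "$preview"

# The file itself is untouched because -i was not used.
first_line=$(head -n 1 "$demo/app.env")
printf '%s\n' "$first_line"
```

Once the preview looks right, the same expression can be reused with -i.bak across the full set of files.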

CSV and log summaries

# CSV: total and average by action

awk -F, 'NR>1{sum[$2]+=$3; cnt[$2]++} END{for(k in sum) printf "%s,%d,%.2f\n", k, sum[k], sum[k]/cnt[k]}' data.csv | sort

# Nginx access log: count status codes

awk '{c[$9]++} END{for(k in c) printf "%s %d\n", k, c[k]}' /var/log/nginx/access.log 2>/dev/null | sort -nr

# Auth log: top source IPs for failures

grep -E "Failed password|authentication failure" /var/log/auth.log 2>/dev/null \
| grep -oE "\b([0-9]{1,3}\.){3}[0-9]{1,3}\b" \
| sort | uniq -c | sort -nr | head

Practical lab

- Prepare a playground with mixed files.

mkdir -p ~/playground/day13/{logs,src,conf}

printf "alpha\n\nbeta\n" > ~/playground/day13/src/readme.md

printf "2025-10-03 user=alice time_ms=120\n2025-10-03 user=bob time_ms=80\n" > ~/playground/day13/logs/app.log

cp /etc/hosts ~/playground/day13/conf/hosts.sample 2>/dev/null || true

- Find all .sample files and copy them without the suffix.

cd ~/playground/day13

find conf -type f -name "*.sample" -print0 | xargs -0 -r -I{} sh -c 'cp -v "$1" "${1%.sample}"' _ {}

- Change a config key across .sample files.

find conf -type f -name "*.sample" -print0 | xargs -0 -r sed -i.bak 's/^HOSTNAME=.*/HOSTNAME=demo.local/'

- Summarize log timing by user with awk.

awk '{for(i=1;i<=NF;i++){if($i~/^user=/)u=substr($i,6); if($i~/^time_ms=/)t=substr($i,9)}} {sum[u]+=t; cnt[u]++} END{for(k in sum) printf "%s %.2f\n", k, sum[k]/cnt[k]}' logs/app.log | sort

- Remove empty lines from markdown under src/.

find src -type f -name "*.md" -print0 | xargs -0 -r sed -i.bak '/^$/d'

Troubleshooting

- Argument list too long when passing many files. Use find ... -print0 | xargs -0 or -exec ... + to batch arguments.

- Files with spaces break commands. Always prefer NUL safe pairs: -print0 with xargs -0.

- sed -i is different on macOS and GNU sed. Use a backup suffix for portability, for example -i.bak.

- awk field splits look wrong. Set the correct delimiter with -F and check that lines do not contain embedded commas or spaces in quoted fields.

- Bulk edits modify generated or vendor files. Exclude directories with -not -path in find or --exclude-dir in grep -R.
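Two of the fixes above, batching with -exec ... + and excluding a vendor directory, can be combined in one command. The sketch below uses an invented directory layout; wc -l stands in for whatever command the batch should run.

```shell
# Illustrative tree: two project files (one with a space) and one vendored file.
demo=$(mktemp -d)
mkdir -p "$demo/app" "$demo/vendor/lib"
printf 'x\n' > "$demo/app/main file.py"
printf 'x\ny\n' > "$demo/app/util.py"
printf 'x\n' > "$demo/vendor/lib/dep.py"

# -exec ... {} + passes many names per invocation, like xargs, and is safe with spaces.
# -not -path keeps vendored files out of the batch.
counted=$(find "$demo" -type f -name '*.py' -not -path '*/vendor/*' -exec wc -l {} + | sort)
printf '%s\n' "$counted"
```

Because find hands the names to the command directly, no NUL separated pipe is needed for this form.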

Next steps

Day 14 covers shell scripting basics. It introduces writing reusable scripts with arguments, exit codes, functions, strict mode with set -euo pipefail, and logging. It ends with a small script that wraps a multi step workflow from today.